Online harassment is a hot topic right now, as Twitter’s perennial battle with trolls heats up and forces the company to develop new features to combat abuse. Technology companies are scrambling to create solutions that will make their communities safer for users, and now Google is taking on the challenge of online harassment through its Jigsaw technology incubator. Jigsaw’s engineers and researchers tackle geopolitical problems like attacks on free speech, injustice, corruption, and violent extremism.

Perspective is Jigsaw’s latest project, aimed at improving the comment sections of websites, which can become hotbeds of harassment when left unmoderated. Eliminating the darker aspects of human behavior, especially when combating those operating under the cloak of online anonymity, has proven to be an exquisite challenge best suited to the bots.

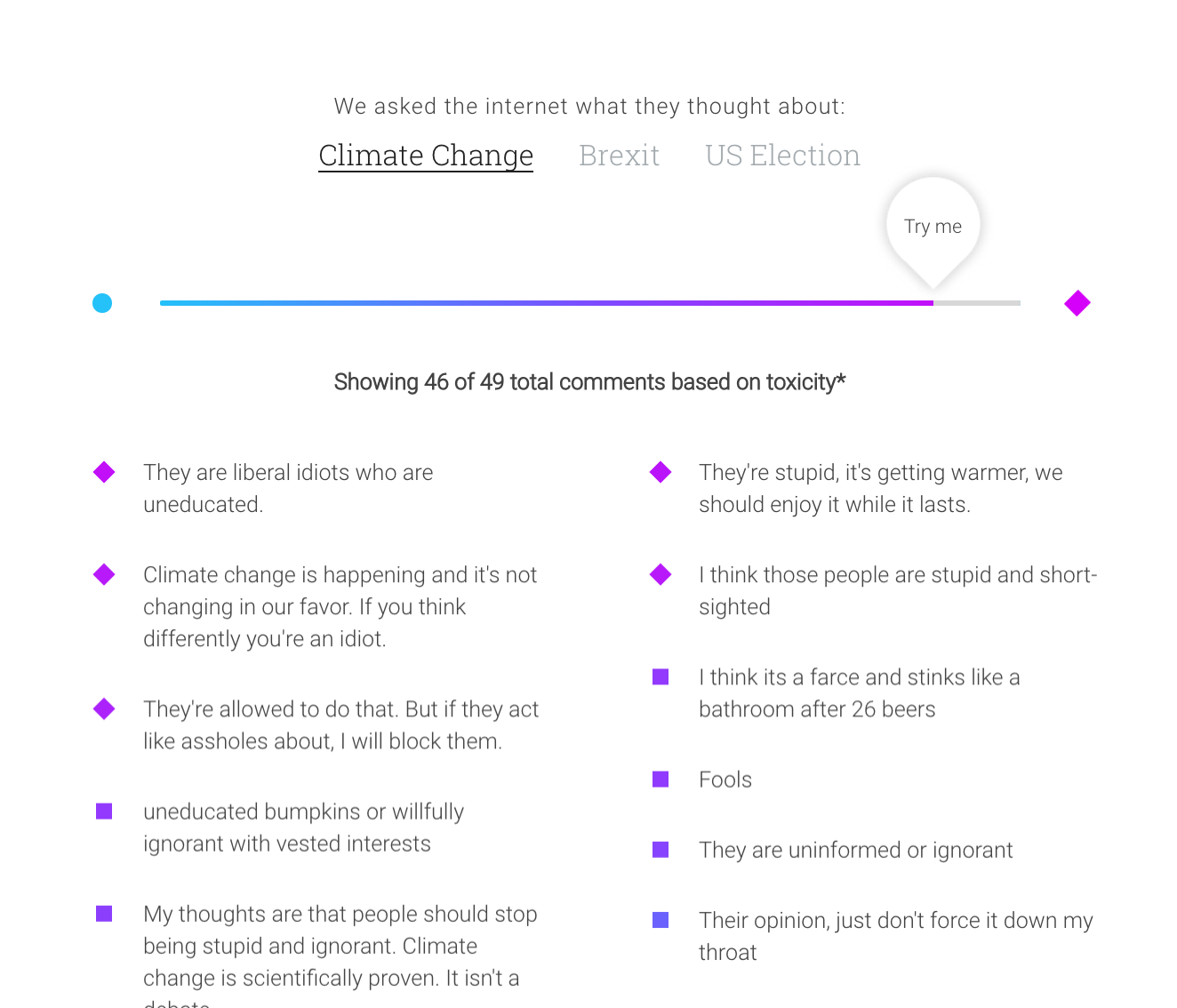

The Perspective project uses machine learning to identify and filter comments for toxicity. Its API scores comments based on “the perceived impact a comment might have on a conversation.” Publishers can then use that information to offer real-time feedback to commenters and speed up moderation. The live demo allows readers to filter the comments on a sliding scale, based on the level of toxicity they are willing to engage with.
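The reader-side filter described above can be sketched in a few lines. This is a minimal illustration, not Perspective’s implementation: it assumes each comment already carries a toxicity score between 0 and 1, and the `ScoredComment` type, the `filter_by_toxicity` function, and the sample scores are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScoredComment:
    """A comment paired with a toxicity score (assumed range: 0.0 to 1.0)."""
    text: str
    toxicity: float


def filter_by_toxicity(comments, max_toxicity):
    """Return only the comments at or below the reader's chosen threshold."""
    return [c for c in comments if c.toxicity <= max_toxicity]


# Hypothetical scores for illustration; real scores would come from the API.
comments = [
    ScoredComment("Thanks, this was a helpful read.", 0.03),
    ScoredComment("I disagree with the second point.", 0.12),
    ScoredComment("Only an idiot would believe this.", 0.88),
]

# A cautious default hides more comments; raising the threshold shows more.
for comment in filter_by_toxicity(comments, max_toxicity=0.5):
    print(comment.text)
```

Nothing is edited or deleted in this approach. The threshold only decides what a given reader sees, so a publication could pick a conservative default and let readers raise it.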

The Perspective site also includes a Writing Demo that delivers real-time feedback on the toxicity level of your text as you type. The model defines toxic as “a rude, disrespectful, or unreasonable comment that is likely to make you leave a discussion.”

Developers Can Request Access to the Perspective API and Major Publications Are Already Experimenting with It

Toxic commenting and trolls are especially rampant on news sites, requiring moderators to be constantly vigilant and ready to neutralize threats to civil discourse. This is why the New York Times employs 14 full-time moderators to manually review the 11,000 comments that come in each day. Despite the efforts of this dedicated team, commenting is only available on 10% of Times articles due to the moderation load.

As a partner on this project, the New York Times open sourced 10 years of moderated comment archives to help the Jigsaw team build the machine learning models that will improve conversations on the web. The publication is currently creating an open source moderation tool to expand community discussion to other areas of the Times.

The Wikimedia Foundation is also collaborating with Jigsaw to develop tools for automating detection of toxic comments and analyzing their impact in discussions at scale. These tools are aimed at mitigating the personal attacks leveled at volunteer editors in an effort to improve overall community health.

The Perspective project is still in its early days of research and development, but developers can sign up to request an API key. Google will be open sourcing the experiments, models, and research data gained from testing machine learning as a tool for improving online discussion.
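For developers who do get a key, working with the API might look roughly like the sketch below. The endpoint URL, the `TOXICITY` attribute name, and the `attributeScores` response layout are assumptions that should be checked against the current API documentation. The helper names and the sample response are made up for illustration, and the sketch deliberately makes no network call.

```python
import json

# Assumed endpoint; verify against the current Perspective API documentation.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text):
    """Build the JSON body asking for a toxicity score (assumed request shape)."""
    return {
        "comment": {"text": text},
        "requestedAttributes": {"TOXICITY": {}},
    }


def extract_toxicity(response):
    """Pull the summary toxicity score out of a response (assumed layout)."""
    return response["attributeScores"]["TOXICITY"]["summaryScore"]["value"]


# Hypothetical response, used so the sketch runs without a key or network.
sample_response = {
    "attributeScores": {
        "TOXICITY": {"summaryScore": {"value": 0.91, "type": "PROBABILITY"}}
    }
}

print(json.dumps(build_request("You are a terrible writer.")))
print(extract_toxicity(sample_response))
```

In a real integration, the body would presumably be sent as a POST to the endpoint with the API key attached, and the returned score fed into whatever moderation or feedback logic the site uses.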

As WordPress powers more than 27% of all websites, a plugin built with the Perspective API could have a major impact on raising the standard of discourse for a large segment of comment-enabled sites. Many publications that might otherwise value thoughtful discussion have resorted to turning comments off entirely because of the burden of moderation.

Those who rattle off the tired internet maxim that says “Never read the comments” speak to the pervasive toxicity that has invaded online discourse, but they also betray their own fragility in engaging commenters who sabotage discussions with incivility. Readers don’t always have the emotional energy to deal with rude comments that slipped through moderation. While some may find Reddit-style wild west commenting to be spirited and amusing, there are plenty of others who find it demoralizing.

One thing I appreciate about the Perspective project’s demo is that it doesn’t aim to edit or change the comments to make them less toxic. Instead, it offers readers a way to filter based on their individual comfort level. With clear warnings in place and a default view set on the safer side, the publication is no longer obligated to overly censor comments for the lowest threshold of offense.

The Perspective project is experimenting with using machine learning to wrangle the human factor of interacting online. It’s come to the point that moderating comments and weeding out toxicity have become overwhelming for those trying to run a publication. The most encouraging aspect of this experiment is that Google put engineers, designers, and researchers on this problem because comments still matter. This new technology affirms the importance of public discourse on the web and aims to preserve comments as a safe place for conversations. I’m interested to see what WordPress developers can build with the Perspective API once it is available.

Hopefully the API is not based on input from the likes of Politifact, Snopes, Daily Kos, Huffington Post, CNN, etc…

At the end of the day, an API is just code, written by biased humans, which is what Facebook’s “fake news” controversy reminded us of after the recent U.S. presidential election.

As Sarah’s “Why Comments Still Matter” post addressed in 2014, sites like CNN.com or Popular Science really just disabled comments because they were tired of being called out on their facts (one of the best blog posts I’ve seen on the topic, btw).

TL;DR: I doubt this API changes anything, unless it’s just another filter against foul language, or is somehow configurable.