Last week XWP dropped an intriguing preview of a new project called Tide that aims to improve code quality across the WordPress plugin and theme ecosystems. With the support of Google, Automattic, and WP Engine, the company has been building a new service that will help users make better plugin decisions and assist developers in writing better code.

XWP’s marketing manager Rob Stinson summarized the project’s direction so far:

> Tide is a service, consisting of an API, Audit Server, and Sync Server, working in tandem to run a series of automated tests against the WordPress.org plugin and theme directories. Through the Tide plugin, the results of these tests are delivered as an aggregated score in the WordPress admin that represents the overall code quality of the plugin or theme. A comprehensive report is generated, equipping developers to better understand how they can increase the quality of their code.

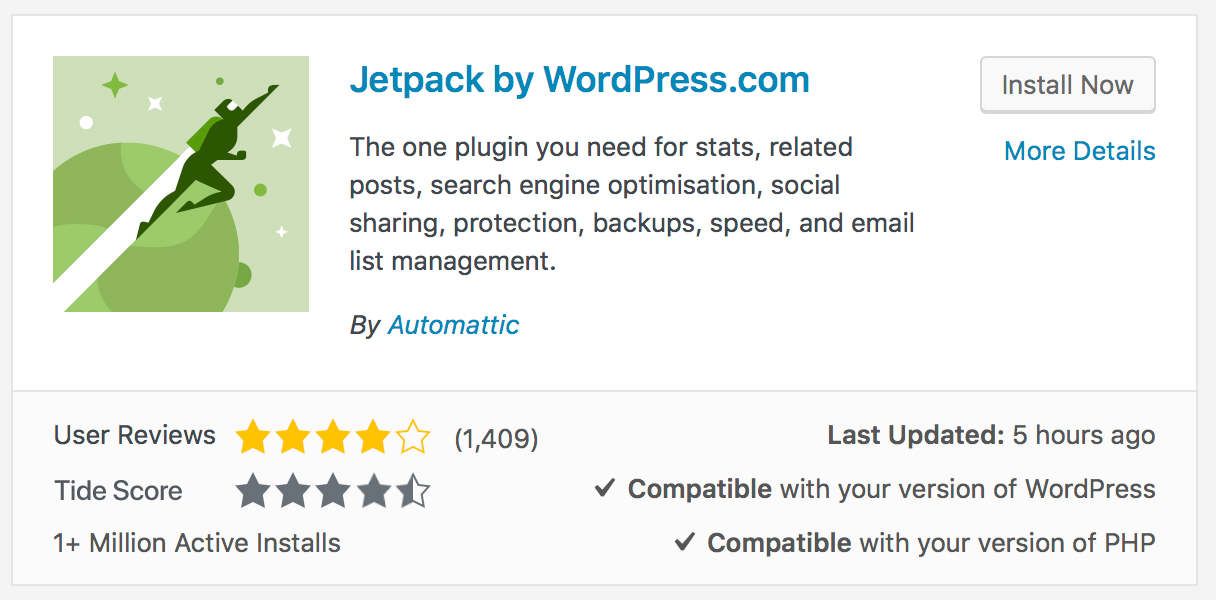

The XWP announcement also included a screenshot of how this data might be presented in the WordPress plugin directory:

XWP plans to unveil the service at the Google booth at WordCamp US in Nashville, where the team will invite the community to get involved. Naturally, a project with the potential to have this much impact on the plugin ecosystem raises many questions about who is behind the vision and what kind of metrics will be used.

I contacted Rob Stinson and Luke Carbis at XWP, who are both contributors to the project, to get an inside look at how it started and where they anticipate it going.

“Tide was started at XWP about 12 months ago when one of our service teams pulled together the idea, followed up by a proof of concept, of a tool that ran a series of code quality tests against a package of code (WordPress plugin) and returned the results via an API,” Stinson said. “We shortly after came up with the name Tide, inspired by the proverb ‘A rising tide lifts all boats,’ thinking that if a tool like this could lower the barrier of entry to good quality code for enough developers, it could lift the quality of code across the whole WordPress ecosystem.”

Stinson said XWP ramped up its efforts on Tide during the last few months after beginning to see its potential and sharing the vision with partners.

“Google, Automattic and WP Engine have all helped resource (funds, infrastructure, developer time, advice etc) the project recently as well,” Stinson said. “Their support has really helped us build momentum. Google have been a big part of this since about August. We had been working with them on other projects and when we shared with them the vision for Tide, they loved it and saw how in line it is with the vision they have for a better performant web.”

The Tide service is not currently active but a beta version will launch at WordCamp US with a WordPress plugin to follow shortly thereafter. Stinson said the team designed the first version to present the possibilities of Tide and encourage feedback and contribution from the community.

“We realize that Tide will be its best if it’s open sourced,” he said. “There are many moving parts to it and we recognize that the larger the input from the community, the better it will represent and solve the needs of the community around code quality.”

At this phase of the project, nothing has been set in stone. The Tide team is continuing to experiment with different ways of making the plugin audit data available, as well as refining how that data is weighed when delivering a Tide score.

“The star rating is just an idea we have been playing with,” Stinson said. “The purpose of it will be to aggregate the full report that is produced by Tide into a simple and easy to understand metric that WordPress users can refer to when making decisions about plugins and themes. We know we haven’t got this metric and how it is displayed quite right. We’ve had some great feedback from the community already.”

The service is not just designed to output scores but also to make it easy for developers to identify weaknesses in their code and learn how to fix them.

“Lowering the barrier of entry to writing good code was the original inspiration for the idea,” Stinson said.

Tide Project Team Plans to Refine Metrics Used for Audit Score based on Community Feedback

The Tide project website, wptide.org, will launch at WordCamp US and will provide developers with scores, including specifics like line numbers and descriptions of failed sniffs. Plugin developers will be able to use the site to improve their code and WordPress users will be able to quickly check the quality of a plugin. XWP product manager Luke Carbis explained how the Tide score is currently calculated.

“Right now, Tide runs a series of code sniffs across a plugin / theme, takes the results, applies some weighting (potential security issues are more important than tabs vs. spaces), and then averages the results per line of code,” Carbis said. “The output of this is a score out of 100, which is a great indicator of the quality of a plugin or theme. The ‘algorithm’ that determines the score is basically just a series of weightings.”

The weightings the service is currently using were selected as a starting point, but Carbis said the team hopes the WordPress community will help them to refine it.
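As a rough illustration of the calculation Carbis describes, the Python sketch below weights each sniff violation by category, averages the penalty over the lines of code, and maps the result to a score out of 100. The category names, the weight values, the `Violation` type, and the exact formula are assumptions made for illustration; the article does not publish Tide's actual weightings.

```python
from dataclasses import dataclass
from typing import Iterable

# Hypothetical weights. The article only says that potential security
# issues count for more than style issues such as tabs vs. spaces.
WEIGHTS = {"security": 10.0, "performance": 3.0, "style": 0.5}
DEFAULT_WEIGHT = 1.0  # assumed weight for any category not listed above


@dataclass
class Violation:
    """One failed sniff, as a report might list it (hypothetical shape)."""
    category: str
    line: int
    message: str


def weighted_score(violations: Iterable[Violation], lines_of_code: int) -> float:
    """Return a 0-100 score: weighted penalty averaged per line of code."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    penalty = sum(WEIGHTS.get(v.category, DEFAULT_WEIGHT) for v in violations)
    score = 100.0 - 100.0 * penalty / lines_of_code
    # Clamp so that very noisy code bottoms out at 0 instead of going negative.
    return max(0.0, min(100.0, score))


report = [
    Violation("security", 12, "Unescaped output"),
    Violation("style", 40, "Spaces used for indentation"),
    Violation("style", 41, "Spaces used for indentation"),
]
print(weighted_score(report, lines_of_code=200))
```

With these made-up weights, a single security finding costs as much as twenty style findings, which is the kind of trade-off the team says it wants the community to help tune.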

“If it makes sense, maybe one day this score could be surfaced in the WordPress admin (on the add new plugin page),” Carbis said. “Or maybe it could influence the search results (higher rated plugins ranked first). Or maybe it just stays on wptide.org. That’s really up to the community to decide.”

In addition to running code sniffs, the Tide service will run two other scans. The first is a Lighthouse scan, performed on themes using Google’s open-source, automated tool for improving the quality of web pages. Carbis calls it a “huge technological accomplishment.”

“For every theme in the directory, we’re spinning up a temporary WordPress install, and running a Lighthouse audit in a headless Chrome instance,” Carbis said. “This means we get a detailed report of the theme’s front end output quality, not just the code that powers it.”

The second scan Tide will perform measures PHP compatibility and will apply to both plugins and themes.

“Tide can tell which versions of PHP a plugin or theme will work with,” Carbis said. “For users, this means we could potentially hide results that we know won’t work with their WordPress install (or at least show a warning). For hosts, this means they can easily check the PHP compatibility before upgrading an install to PHP 7 (we think this will cause many more installs to be upgraded – the net effect being a noticeable speed increase, which we find really exciting and motivating).”
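To make the idea of hiding or flagging incompatible results concrete, here is a minimal Python sketch. It assumes a hypothetical report format in which each plugin or theme lists the PHP versions it was found to work with as "major.minor" strings; the field names, slugs, and function names are invented for illustration and are not part of any Tide or WordPress.org API.

```python
from typing import Dict, List, Tuple


def major_minor(version: str) -> Tuple[int, int]:
    """Reduce a version such as '7.0.25' to (7, 0). Assumes 'major.minor[.patch]'."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def split_by_compatibility(
    results: List[Dict], site_php: str
) -> Tuple[List[str], List[str]]:
    """Return (compatible_slugs, flagged_slugs) for the site's PHP version."""
    site = major_minor(site_php)
    compatible: List[str] = []
    flagged: List[str] = []
    for item in results:
        supported = {major_minor(v) for v in item["php_versions"]}
        if site in supported:
            compatible.append(item["slug"])
        else:
            # A real UI could hide these or show a warning instead.
            flagged.append(item["slug"])
    return compatible, flagged


# Hypothetical search results with made-up compatibility data.
search_results = [
    {"slug": "example-gallery", "php_versions": ["5.6", "7.0", "7.1"]},
    {"slug": "legacy-forms", "php_versions": ["5.2", "5.3"]},
]
print(split_by_compatibility(search_results, site_php="7.0.25"))
```

A host could run the same check against every plugin and theme on an install before moving it to PHP 7.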

Carbis said the team’s short-term goal is to get the PHP compatibility piece into the WordPress.org API, which he says could start influencing search results without any changes to WordPress core.

“We’d also like to start engaging with the community to find out whether surfacing a Code Quality score to WordPress users is helpful, and if it is, what does that look like? (e.g. score out of 100, 5 star rating, A/B/C/D, etc.),” Carbis said. “We will release our suggestion for what this could look like as a plugin shortly after WordCamp US.”

More specific information about the metrics Tide is currently using and how they apply to plugins and themes will be available after the service launches in beta. If you are attending WordCamp US and have suggestions or feedback to offer the team, make sure to stop by the Google sponsorship booth.
